Personally, I find it easiest to think of KVM (Kernel-based Virtual Machine) as a layer of abstraction over the Intel VT-x and AMD-V hardware virtualization technologies. We take a machine whose processor supports one of these technologies, put Linux on it, install KVM, and get the ability to create virtual machines. This is roughly how cloud hosting services such as Amazon Web Services work. Xen is sometimes used alongside KVM, but a discussion of it is beyond the scope of this post. Unlike container virtualization technologies such as Docker, KVM lets you run any OS as a guest system, but it also has greater virtualization overhead.

Note: The steps below have been tested by me on Ubuntu Linux 14.04, but in theory will be largely valid for other versions of Ubuntu and other Linux distributions. Everything should work both on the desktop and on the server, which is accessed via SSH.

KVM Installation

Check if Intel VT-x or AMD-V is supported by our processor:

grep -E "(vmx|svm)" /proc/cpuinfo

If the command prints anything, hardware virtualization is supported and you can proceed.
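The same check can be sketched in Python. This is a minimal illustration, not part of the original post: the helper name `hw_virt_flags` is hypothetical, and it assumes the x86 layout of /proc/cpuinfo, where each CPU has a `flags` line.

```python
def hw_virt_flags(cpuinfo_text):
    """Return the hardware-virtualization flags (vmx, svm) found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # x86 kernels list CPU features on lines such as "flags : fpu vme vmx ..."
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(f for f in flags if f in ("vmx", "svm"))
    return found


# Made-up sample input; on a real host, pass open("/proc/cpuinfo").read() instead.
sample = "processor\t: 0\nflags\t\t: fpu vme vmx sse\n"
print(sorted(hw_virt_flags(sample)))
```

An empty result corresponds to grep printing nothing.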

Install KVM:

sudo apt-get update

sudo apt-get install qemu-kvm libvirt-bin virtinst bridge-utils

Where things are customarily stored:

- /var/lib/libvirt/boot/ - ISO images for installing guest systems;

- /var/lib/libvirt/images/ - hard disk images of guest systems;

- /var/log/libvirt/ - here you should look for all the logs;

- /etc/libvirt/ - directory with configuration files;

Now that KVM is installed, let's create our first virtual machine.

Creation of the first virtual machine

I chose FreeBSD as the guest system. Download the ISO image of the system:

cd /var/lib/libvirt/boot/

sudo wget http://ftp.freebsd.org/path/to/some-freebsd-disk.iso

Virtual machines are mostly managed with the virsh utility:

sudo virsh --help

Before starting the virtual machine, we need to collect some additional information.

We look at the list of available networks:

sudo virsh net-list

View information about a specific network (named default):

sudo virsh net-info default

We look at the list of available optimizations for guest OS:

sudo virt-install --os-variant list

So, now we create a virtual machine with 1 CPU, 1 GB RAM and 32 GB of disk space connected to the default network:

sudo virt-install \
  --virt-type=kvm \
  --name freebsd10 \
  --ram 1024 \
  --vcpus=1 \
  --os-variant=freebsd8 \
  --hvm \
  --cdrom=/var/lib/libvirt/boot/FreeBSD-10.2-RELEASE-amd64-disc1.iso \
  --network network=default,model=virtio \
  --graphics vnc \
  --disk path=/var/lib/libvirt/images/freebsd10.img,size=32,bus=virtio

You may see:

WARNING Unable to connect to graphical console: virt-viewer not
installed. Please install the "virt-viewer" package.

Domain installation still in progress. You can reconnect to the console
to complete the installation process.

It's okay, that's how it should be.
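If you create guests often, the virt-install invocation can be assembled programmatically. The sketch below only builds and prints the argument list used in this post; the function name `virt_install_args` and its parameters are illustrative assumptions, not part of virt-install itself.

```python
import shlex


def virt_install_args(name, ram_mb, vcpus, os_variant, iso_path,
                      disk_path, disk_gb, network="default"):
    """Build the virt-install argument list for a KVM guest with virtio devices."""
    return [
        "virt-install",
        "--virt-type=kvm",
        "--name", name,
        "--ram", str(ram_mb),
        "--vcpus=" + str(vcpus),
        "--os-variant=" + os_variant,
        "--hvm",
        "--cdrom=" + iso_path,
        "--network", "network={},model=virtio".format(network),
        "--graphics", "vnc",
        "--disk", "path={},size={},bus=virtio".format(disk_path, disk_gb),
    ]


args = virt_install_args(
    "freebsd10", 1024, 1, "freebsd8",
    "/var/lib/libvirt/boot/FreeBSD-10.2-RELEASE-amd64-disc1.iso",
    "/var/lib/libvirt/images/freebsd10.img", 32,
)
# Print a copy-pasteable command line; run it with sudo, or pass the list
# to subprocess.check_call() from a script that already runs as root.
print(" ".join(shlex.quote(a) for a in args))
```

Passing a list rather than a single string avoids shell quoting problems if a path ever contains spaces.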

Then we look at the properties of the virtual machine in XML format:

sudo virsh dumpxml freebsd10

This is the most complete information available. Among other things, it includes the MAC address, which we will need later. For now, find the VNC settings in it.

Using your favorite VNC client (I personally use Remmina), connect via VNC, using SSH port forwarding if necessary. You land directly in the FreeBSD installer. From there everything is as usual: Next, Next, Next, and you get an installed system.
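Looking up the VNC port in the dumpxml output can also be scripted. The sketch below assumes the usual libvirt domain XML layout, where the VNC console is a `graphics` element with `type='vnc'`; the sample XML is made up for illustration, and the helper name `vnc_display` is hypothetical.

```python
from xml.etree import ElementTree


def vnc_display(domain_xml):
    """Return (listen_address, port) of the first VNC graphics element, or None."""
    root = ElementTree.fromstring(domain_xml)
    for graphics in root.iter("graphics"):
        if graphics.get("type") == "vnc":
            return graphics.get("listen"), int(graphics.get("port", "-1"))
    return None


# Made-up fragment in the shape of `virsh dumpxml` output.
SAMPLE = """<domain type='kvm'>
  <name>freebsd10</name>
  <devices>
    <graphics type='vnc' port='5900' autoport='yes' listen='127.0.0.1'/>
  </devices>
</domain>"""

print(vnc_display(SAMPLE))
```

If the listen address is 127.0.0.1, the port is reachable only from the host itself, which is where SSH port forwarding (for example `ssh -L 5900:127.0.0.1:5900 user@host`) comes in.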

Basic commands

Let's now look at the basic commands for working with KVM.

Getting a list of all virtual machines:

sudo virsh list --all

Getting information about a specific virtual machine:

sudo virsh dominfo freebsd10

Start virtual machine:

sudo virsh start freebsd10

Stop virtual machine:

sudo virsh shutdown freebsd10

Force the virtual machine to power off (despite the name, this does not delete it):

sudo virsh destroy freebsd10

Reboot the virtual machine:

sudo virsh reboot freebsd10

Clone virtual machine:

sudo virt-clone -o freebsd10 -n freebsd10-clone \
  --file /var/lib/libvirt/images/freebsd10-clone.img

Enable/disable autostart:

sudo virsh autostart freebsd10

sudo virsh autostart --disable freebsd10

Run virsh in interactive mode (the commands are the same as described above):

sudo virsh

Edit the properties of the virtual machine in XML; among other things, this is where you can change the memory limit:

sudo virsh edit freebsd10

Important! Comments from the edited XML are unfortunately removed.

When the virtual machine is stopped, the disk can also be resized:

sudo qemu-img resize /var/lib/libvirt/images/freebsd10.img -2G

sudo qemu-img info /var/lib/libvirt/images/freebsd10.img

Important! Your guest OS will most likely not like the fact that the disk suddenly became larger or smaller. At best, it will boot in emergency mode with a suggestion to repartition the disk. You probably don't want to do this. It may be much easier to start a new virtual machine and migrate all the data to it.

Backup and restore are quite simple: save the dumpxml output and the disk image somewhere, then restore them when needed. There are video demonstrations of this process on YouTube, and it really is easy.
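A minimal sketch of that backup and restore procedure follows. It assumes the guest is shut off while the image is copied and that restoring means copying the image back and re-registering the saved XML with `virsh define`. The function names and the backup directory layout are illustrative assumptions.

```python
import os
import shutil
import subprocess


def backup_paths(domain, image_path, backup_dir):
    """Return where the XML definition and the disk image copy will be stored."""
    xml_copy = os.path.join(backup_dir, domain + ".xml")
    img_copy = os.path.join(backup_dir, os.path.basename(image_path))
    return xml_copy, img_copy


def backup(domain, image_path, backup_dir):
    """Save the domain XML and copy the disk image (run as root, guest shut off)."""
    xml_copy, img_copy = backup_paths(domain, image_path, backup_dir)
    xml = subprocess.check_output(["virsh", "dumpxml", domain])
    with open(xml_copy, "wb") as f:
        f.write(xml)
    shutil.copy2(image_path, img_copy)
    return xml_copy, img_copy


def restore(xml_copy, img_copy, image_path):
    """Put the disk image back and re-register the guest from the saved XML."""
    shutil.copy2(img_copy, image_path)
    subprocess.check_call(["virsh", "define", xml_copy])


print(backup_paths("freebsd10", "/var/lib/libvirt/images/freebsd10.img", "/backup"))
```

Only `backup_paths` is exercised here; `backup` and `restore` need a host with libvirt installed.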

Network settings

An interesting question is how to determine what IP address the virtual machine received after loading? In KVM, this is done in a clever way. I ended up writing the following Python script:

#!/usr/bin/env python3

# virt-ip.py script
# (c) 2016 Aleksander Alekseev
# http://website/

import sys
import re
import os
import subprocess
from xml.etree import ElementTree


def eprint(msg):
    """Print a message to stderr."""
    print(msg, file=sys.stderr)


if len(sys.argv) < 2:
    eprint("USAGE: " + sys.argv[0] + " <domain>")
    eprint("Example: " + sys.argv[0] + " freebsd10")
    sys.exit(1)

if os.geteuid() != 0:
    eprint("ERROR: you should be root")
    eprint("Hint: run `sudo " + sys.argv[0] + " ...`")
    sys.exit(1)

if subprocess.call("which arping >/dev/null 2>&1", shell=True) != 0:
    eprint("ERROR: arping not found")
    eprint("Hint: run `sudo apt-get install arping`")
    sys.exit(1)

domain = sys.argv[1]

# The name is interpolated into a shell command below, so accept only
# letters, digits, "_" and "-" (assumed character set).
if not re.match(r"^[a-zA-Z0-9_-]*$", domain):
    eprint("ERROR: invalid characters in domain name")
    sys.exit(1)

domout = subprocess.check_output("virsh dumpxml " + domain + " || true",
                                 shell=True)
domout = domout.decode("utf-8").strip()

if domout == "":
    # error message already printed by dumpxml
    sys.exit(1)

doc = ElementTree.fromstring(domout)

# 1. list all network interfaces
# 2. run `arping` on every interface in parallel
# 3. grep replies
cmd = "(ifconfig | cut -d ' ' -f 1 | grep -E '.' | " + \
      "xargs -P0 -I IFACE arping -i IFACE -c 1 {} 2>&1 | " + \
      "grep 'bytes from') || true"

# Every <mac address="..."/> element in the domain XML is a guest NIC.
for child in doc.iter():
    if child.tag == "mac":
        macaddr = child.attrib["address"]
        macout = subprocess.check_output(cmd.format(macaddr),
                                         shell=True)
        print(macout.decode("utf-8"))
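The script prints the raw arping reply lines. To get just the IP addresses, the replies can be post-processed. The sketch below assumes reply lines of the form `60 bytes from <MAC> (<IP>): index=0 time=...`, which is what the arping packaged as `arping` in Ubuntu prints as far as I know; other arping implementations format replies differently, so treat the pattern as an assumption.

```python
import re

# Assumed reply format: "60 bytes from 52:54:00:aa:bb:cc (192.168.122.15): index=0 ..."
REPLY_RE = re.compile(r"bytes from\s+([0-9A-Fa-f:]{17})\s+\(([0-9.]+)\)")


def reply_ips(arping_output):
    """Return the sorted unique IP addresses found in arping reply lines."""
    return sorted({m.group(2) for m in REPLY_RE.finditer(arping_output)})


# Made-up sample output with one duplicate reply.
sample = (
    "60 bytes from 52:54:00:aa:bb:cc (192.168.122.15): index=0 time=250.000 usec\n"
    "60 bytes from 52:54:00:aa:bb:cc (192.168.122.15): index=0 time=310.000 usec\n"
)
print(reply_ips(sample))
```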

The script works with both the default network and the bridged network, the configuration of which we will discuss later. However, in practice, it is much more convenient to configure KVM so that it always assigns the same IP addresses to guests. To do this, edit the network settings:

sudo virsh net-edit default

... something like this:

After making these changes


...and replace it with something like:


We reboot the guest system and check that it received an IP via DHCP from the router. If you want the guest system to have static IP address, this is configured normally within the guest itself.

virt-manager program

You may also be interested in the virt-manager program:

sudo apt-get install virt-manager

sudo usermod -a -G libvirtd USERNAME

This is what its main window looks like:

As you can see, virt-manager is not only a GUI for virtual machines running locally. With it you can manage virtual machines running on other hosts and watch nice real-time graphs. What I personally find especially convenient is that you do not need to dig through configs to find out which VNC port a particular guest is using. You just find the virtual machine in the list, double-click it, and get access to its monitor.

It's also very convenient to do things with virt-manager that would otherwise require laborious editing of XML files and, in some cases, executing additional commands. For example, renaming virtual machines, setting up CPU affinity, and similar things. By the way, the use of CPU affinity significantly reduces the effect of noisy neighbors and the influence of virtual machines on the host system. Always use it whenever possible.
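Outside virt-manager, CPU affinity can be set from the command line with `virsh vcpupin <domain> <vcpu> <cpulist>`. The sketch below only builds those commands from a mapping of guest vCPUs to host CPUs; the helper name `vcpupin_commands` and the example mapping are illustrative assumptions.

```python
def vcpupin_commands(domain, vcpu_to_cpus):
    """Build one `virsh vcpupin` command per guest vCPU.

    vcpu_to_cpus maps a vCPU number to a host CPU list such as 2 or "2-3".
    """
    return [
        ["virsh", "vcpupin", domain, str(vcpu), str(cpus)]
        for vcpu, cpus in sorted(vcpu_to_cpus.items())
    ]


# Pin vCPU 0 to host CPU 2 and vCPU 1 to host CPU 3 (example values).
for cmd in vcpupin_commands("freebsd10", {0: 2, 1: 3}):
    print(" ".join(cmd))
```

Each resulting list can be passed to subprocess.check_call() on the KVM host.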

If you decide to use KVM as a replacement for VirtualBox, please note that they cannot share hardware virtualization. In order for KVM to work on your desktop, you will not only have to stop all virtual machines in VirtualBox and Vagrant, but also reboot the system. I personally find KVM much more convenient than VirtualBox, at least because it doesn't require you to run the command sudo /sbin/rcvboxdrv setup after each kernel update, it works adequately with Unity, and generally allows you to hide all the windows.

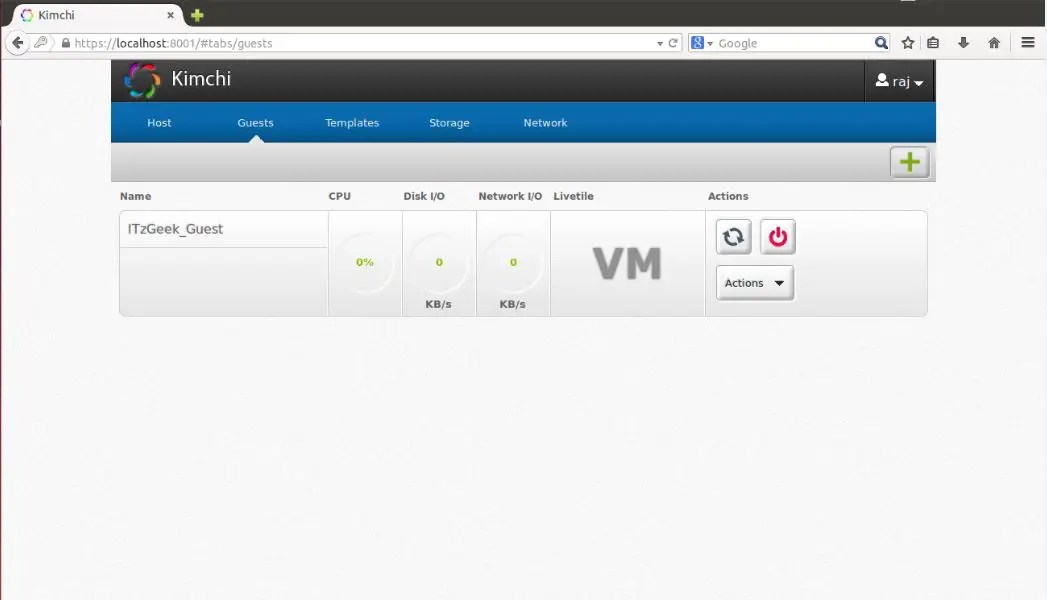

Kimchi is an HTML5-based web interface for KVM. It provides an easy and flexible interface for creating and managing guest virtual machines. Kimchi is installed and runs as a daemon on the KVM host and manages KVM guests with the help of libvirt. The Kimchi interface supports the latest version of each major browser and the version before it, and it also supports mobile browsers.

Kimchi can be installed on the latest versions of RHEL, Fedora, openSUSE and Ubuntu. In this guide, I used Ubuntu 14.10 as the KVM host.

Before configuring kimchi, you must install the following dependent packages.

$ sudo apt-get install gcc make autoconf automake gettext git python-cherrypy3 python-cheetah python-libvirt libvirt-bin python-imaging python-pam python-m2crypto python-jsonschema qemu-kvm libtool python-psutil python-ethtool sosreport python-ipaddr python-ldap python-lxml nfs-common open-iscsi lvm2 xsltproc python-parted nginx firewalld python-guestfs libguestfs-tools python-requests websockify novnc spice-html5 wget

The system will ask for the following details while installing the packages.

1. OK on Postfix configuration.

2. Select Internet Site on general type of mail configuration.

3. Type your FQDN and then select OK.

Once installed, download the latest version of kimchi from github.

$ wget https://github.com/kimchi-project/kimchi/archive/master.zip

Unzip the downloaded file.

$ unzip master.zip
$ cd kimchi-master/

Build kimchi using the following command.

$ ./autogen.sh --system
$ make
$ sudo make install # Optional if running from the source tree
$ sudo kimchid --host=0.0.0.0

Access Kimchi in a web browser at https://localhost:8001. You will be asked to log in; use the credentials you normally use to log in to the system.

Once logged in, you will see a page listing the guest virtual machines running on the current host. The Actions button lets you shut down, restart, or connect to the console of a guest.

To create a new guest machine, click the + sign in the right corner. Machines created this way are built from templates.

You can manage templates from the Templates menu. To create a new template, click the + sign in the right corner. Templates can be created from ISO images; you can place ISO images in /var/lib/kimchi/isos or use a remote one.

You can manage storage pools from the Storage menu, where new storage is added by clicking the + sign. NFS, iSCSI and SCSI Fibre Channel storage are supported.

Networks are managed from the Network menu, where you can create a new isolated, NAT or bridged network.

Today, many tasks that were traditionally assigned to several physical servers are being transferred to virtual environments. Virtualization technologies are also in demand by software developers, since they allow comprehensive testing of applications in various operating systems. At the same time, simplifying many issues, virtualization systems themselves need to be managed, and one cannot do without special solutions.

Vagrant

VirtualBox is deservedly popular among administrators and developers, since it lets you quickly create the necessary environments using a graphical or command-line interface. With no more than about three VMs, deployment and management cause no difficulties, but modern projects tend to accumulate configurations, and the result is a very complex infrastructure that becomes hard to handle. This is the problem that the Vagrant virtual environment manager is designed to solve: it lets you create copies of virtual machines with a predefined configuration and dynamically reallocate VM resources (provisioning) as needed. In the basic distribution Vagrant works with VirtualBox, but the plugin system allows other virtualization systems to be connected. Today, the plugin code for AWS and Rackspace Cloud is open, and a plugin supporting VMware Fusion / Workstation is available by commercial subscription.

Vagrant does not create a virtual machine from scratch. For convenience, the project offers several base images (boxes), which are imported and then used for quick deployment; a guest OS with the desired configuration is assembled on top of a box.

Chef and Puppet are pre-installed in boxes to simplify application deployment. In addition, the desired settings can be applied using the shell. The environments include a complete toolset for running and developing Ruby applications. SSH is used to access the VM, and files can be exchanged through a shared directory.

Vagrant is written in Ruby and can be installed on any platform for which VirtualBox and Ruby are available. Packages for Windows, Linux (deb and rpm) and OS X are available on the download page.

Installing and using it on Ubuntu is simple. Download the VirtualBox and Vagrant packages and install them:

$ sudo dpkg -i virtualbox-4.2.10_amd64.deb
$ sudo dpkg -i vagrant_1.2.2_x86_64.deb

At the time of writing, the latest VirtualBox release, 4.2.14, had problems running Vagrant, so for now it is best to use 4.2.12 or the 4.2.15 test build. Alternatively, you can work around the problem like this:

$ cd ~/.vagrant.d/boxes/BoxName/virtualbox
$ openssl sha1 *.vmdk *.ovf > box.mf

Here is an alternative way to install Vagrant, using Ruby:

$ sudo apt-get install ruby1.8 ruby1.8-dev rubygems1.8
$ sudo gem install vagrant

All project settings are made in a special file called Vagrantfile. To avoid writing it by hand, you can generate a template as follows:

$ mkdir project
$ cd project
$ vagrant init

Now you can look into the generated configuration file and fill in the VM settings (config.vm.*), the SSH connection options (config.ssh.*), and the parameters of Vagrant itself (config.vagrant.*). All of them are well documented, and the meaning of some is clear without explanation.

In fact, several such files are used at startup, each subsequent one overriding the previous: the one built into Vagrant (it cannot be changed), the one supplied with the box (packed using the "--vagrantfile" switch), the one located in ~/.vagrant.d, and the project file. This approach lets you rely on the default settings and override only what is necessary in a particular project.
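A simplified mental model of this layering can be written in a few lines. It assumes each Vagrantfile can be flattened to a dictionary of settings; Vagrant's real merge logic is more involved, and the keys and values below are purely illustrative.

```python
def merge_layers(*layers):
    """Merge configuration layers in order; later layers override earlier ones."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


# Hypothetical settings for each level, from lowest to highest priority.
builtin = {"vm.box": "base", "ssh.username": "vagrant"}   # built into Vagrant
from_box = {"vm.box": "precise64"}                         # shipped inside the box
from_home = {}                                             # ~/.vagrant.d
project = {"ssh.username": "deploy"}                       # project Vagrantfile

print(merge_layers(builtin, from_box, from_home, project))
```

Settings that no later layer touches keep their earlier value, which is why a project file only has to list what differs from the defaults.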

Everything is done with the vagrant command; the list of available options can be viewed with "-h". Right after installation there are no images, so vagrant box list displays an empty list. A ready-made box can reside in the local file system or on a remote server; the name given as a parameter is the one used to refer to it in projects. For example, let's use the official Ubuntu 12.04 LTS box offered by the Vagrant developers.

$ vagrant box add precise64 http://files.vagrantup.com/precise64.box

Now it can be accessed from Vagrantfile:

config.vm.box = "precise64"

Although it’s easier to specify it right away when initializing the project:

$ vagrant init precise64

The easiest way, which does not require learning Chef and Puppet, is to use standard shell commands to configure the VM, which can be written directly in the Vagrantfile or, even better, combined into a script that is connected like this:

Vagrant.configure("2") do |config|
  # :path runs the commands from the script file on the guest
  config.vm.provision :shell, :path => "script.sh"
end

Now all the commands specified in script.sh will be executed when the VM starts up. When starting the project, an ovf file is created, its settings can be viewed using the VirtualBox GUI or the VBoxManage command:

$ VBoxManage import /home/user/.vagrant.d/boxes/precise64/virtualbox/box.ovf
Virtual system 0:
 0: Suggested OS type: "Ubuntu_64"
    (change with "--vsys 0 --ostype

These defaults do not always meet the requirements, but with the provider settings you can easily change the parameters of a particular VM (see the "change with ..." hints):

config.vm.provider :virtualbox do |vb|
  vb.customize ["modifyvm", :id, "--memory", "1024"]
end

Launch and connect to the system via SSH:

$ vagrant up
$ vagrant ssh

To stop the VM, use halt or destroy (the latter removes all files, so next time everything is done from scratch); to hibernate it, use vagrant suspend, and to wake it up, vagrant resume. As an example of working with Chef, you can use a ready-made recipe that configures APT and Apache2:

config.vm.provision :chef_solo do |chef|
  chef.recipe_url = "http://files.vagrantup.com/getting_started/cookbooks.tar.gz"
  chef.add_recipe("vagrant_main")
end

To access the VM "from the outside", you will need to configure port forwarding. By default, forwarding 22 -> 2222 is performed, allowing you to connect via SSH. Add to Vagrantfile:

Vagrant::Config.run do |config|
  config.vm.forward_port 80, 1111
end

The web server can now be accessed by navigating to http://127.0.0.1:1111/. In order not to configure the environment every time, it is better to build a ready-made package based on it.

$ vagrant package --vagrantfile Vagrantfile --output project.box

Now the project.box file can be distributed to other administrators, developers, or ordinary users who will include it using the vagrant box add project.box command.

ConVirt

The Xen and KVM virtualization systems, released under free licenses, lack a user-friendly interface, which often counts against them. However, this shortcoming is easily remedied. ConVirt lets you deploy virtual machines across multiple Xen and KVM servers with a single click, using an easy-to-use interface. All the necessary operations with virtual machines are available: starting, stopping, creating snapshots, controlling and redistributing resources, connecting to a VM via VNC, and automating administration tasks. Ajax makes the interface interactive and similar to a desktop application. For example, a VM can simply be dragged from one server to another. The interface is not localized, but the controls are intuitive.

Server pooling gives you the ability to configure and manage virtual machines and resources at the server pool level, rather than a single server. Agents are not installed on virtual systems, only the convirt-tool package on the physical server is needed. This simplifies administration and deployment.

Once a new server is added, ConVirt will automatically collect its configuration and performance data, providing summary information at several levels - from a single virtual machine, a physical server, to the entire pool. The collected data is used to automatically host new guest systems. This information is also displayed in the form of visual graphs.

Virtual machines are created from templates: descriptions of virtual machine settings that contain data on allocated resources, the path to the OS files, and additional settings. Several ready-made templates are available after installation, and new ones are easy to create if necessary.

All technologies are supported: load balancing, hot migration, virtual disks with growing capacity, allowing you to use resources as needed, and many other features implemented in Xen and KVM. You don't need to stop the VM to reallocate resources.

Several administrators can manage the virtual environment, with auditing and control of their actions.

ConVirt is developed by Convirture using the open core model: only a basic set of functions is freely distributed with source code, and the rest is available in the commercial version. The open source version lacks High Availability support, VLAN integration, backup and recovery, command-line management, notifications, and official support.

During development, the TurboGears2 framework, ExtJs and FLOT libraries were used, MySQL was used for storing information, dnsmasq was used as a DHCP and DNS server. The required package can be found in the repositories of popular Linux distributions.

Karesansui

All the features for managing virtual environments have been implemented: installing the OS, creating configurations for the disk subsystem and virtual network cards, managing quotas, replication, freezing VMs, creating snapshots, viewing detailed statistics and log data, monitoring downloads. From one console, you can manage multiple physical servers and the virtual machines hosted on them. Multi-user work with separation of rights is possible. As a result, the developers managed to implement a virtual environment in the browser that allows you to fully manage the systems.

Karesansui is written in Python; SQLite is used as the DBMS for a single-node system. If you plan to manage Karesansui installations hosted on multiple physical servers, MySQL or PostgreSQL should be used.

You can deploy Karesansui on any Linux. The developers themselves prefer CentOS (for which the site has detailed instructions), although Karesansui does well on Debian and Ubuntu. Before installation, you must fulfill all the dependencies indicated in the documentation. Next, the installation script is launched and the database is initialized. If a multi-server configuration is used, then you just need to specify an external database.

Subsequent work fully compensates for the inconvenience of installation. All settings are divided into seven tabs, the purpose of which is clear from the name: Guest, Settings, Job, Network, Storage, Report and Log. Depending on the user's role, not all of them will be available to him.

You can create a new VM from a local ISO file or by specifying an HTTP/FTP resource with installation images. You will also need to set other attributes: the system name that will be displayed in the list, the network name (hostname), the virtualization technology (Xen or KVM), the RAM and hard disk size (Memory Size and Disk Size), and an icon that represents the guest OS, which makes it quick to spot in the console.

WebVirtMgr

The capabilities of the solutions described above are often excessive, and their installation is not always clear to an administrator with little experience. But there is a way out here too. The WebVirtMgr centralized virtual machine management service was created as a simple replacement for virt-manager that provides comfortable work with VMs from a browser with a Java plugin installed. It supports managing KVM settings: creating, installing, configuring and launching VMs, and taking snapshots and backups of virtual machines. It also manages the network pool and storage pool, works with ISO images, clones images, and shows CPU and RAM usage. The virtual machine is accessed via VNC. All operations are recorded in logs. A single WebVirtMgr installation can manage multiple KVM servers, connecting to them over libvirt RPC (TCP/16509) or SSH.

The interface is written in Python/Django. Installation requires a Linux server. It is distributed as source code and as RPM packages for CentOS, RHEL, Fedora and Oracle Linux 6. Deployment is simple and well described in the project documentation (in Russian): you just need to configure libvirt and install webvirtmgr. The whole process takes about five minutes. After connecting to the Dashboard, select Add Connection and specify the node parameters; after that you can configure VMs.

Scripting the creation of a VM

The simplest script for creating and running a virtual machine using VirtualBox:

#!/bin/bash
vmname="debian01"
# Create and register the VM, then set memory and boot order
VBoxManage createvm --name "${vmname}" --ostype "Debian" --register
VBoxManage modifyvm "${vmname}" --memory 512 --acpi on --boot1 dvd
# Create a 10000 MB fixed-size disk and attach it together with the installer ISO
VBoxManage createhd --filename "${vmname}.vdi" --size 10000 --variant Fixed
VBoxManage storagectl "${vmname}" --name "IDE Controller" --add ide --controller PIIX4
VBoxManage storageattach "${vmname}" --storagectl "IDE Controller" --port 0 --device 0 --type hdd --medium "${vmname}.vdi"
VBoxManage storageattach "${vmname}" --storagectl "IDE Controller" --port 0 --device 1 --type dvddrive --medium /iso/debian-7.1.0-i386-netinst.iso
# Bridged networking on eth0, remote display enabled
VBoxManage modifyvm "${vmname}" --nic1 bridged --bridgeadapter1 eth0 --cableconnected1 on
VBoxManage modifyvm "${vmname}" --vrde on
# Start the VM headless inside a screen session
screen VBoxHeadless --startvm "${vmname}"

Proxmox VE

The previous solutions are good for situations where some infrastructure already exists. But if you are only about to deploy it, you should think about specialized platforms that let you quickly get the desired result. One example is Proxmox Virtual Environment, a Linux distribution (based on Debian 7.0 Wheezy) that lets you quickly build a virtual server infrastructure using OpenVZ and KVM and is practically on par with products such as VMware vSphere, MS Hyper-V and Citrix XenServer.

In fact, the system should only be installed (a couple of simple steps), everything else already works out of the box. Then, using the web interface, you can create a VM. For this purpose, the easiest way is to use OpenVZ templates and containers, which are loaded from external resources directly from the interface with one click (if manually, then copy to the /var/lib/vz/template directory). But templates can also be created by cloning already created systems in the linking mode. This option saves disk space because all linked environments use only one shared copy of the reference template data without duplicating information. The interface is localized and understandable, you do not experience any particular inconvenience when working with it.

There is support for clusters, tools for backing up virtual environments, it is possible to migrate VM between nodes without stopping work. Access control to existing objects (VM, storage, nodes) is implemented on the basis of roles, various authentication mechanisms are supported (AD, LDAP, Linux PAM, built-in Proxmox VE). The web interface provides the ability to access the VM using VNC and SSH consoles, you can view job status, logs, monitoring data and much more. True, some operations specific to HA systems will still have to be performed the old fashioned way in the console, such as creating an authorized iSCSI connection, configuring a cluster, creating multipath, and some other operations.

System requirements are low: x64 CPU (preferably with Intel VT/AMD-V), 1+ GB RAM. The project offers a ready-made ISO image and repository for Debian.

Conclusion

All the solutions described are good in their own way and do an excellent job with the tasks. You just need to choose the most suitable for a particular situation.

On Ubuntu, it is recommended to use the KVM hypervisor (virtual machine manager) and the libvirt library as a tool to manage it. Libvirt includes a set of software APIs and custom virtual machine (VM) management applications virt-manager (graphical user interface, GUI) or virsh (command line, CLI). As alternative managers, you can use convirt (GUI) or convirt2 (WEB interface).

Currently, only the KVM hypervisor is officially supported in Ubuntu. This hypervisor is part of the Linux kernel. Unlike Xen, KVM does not support paravirtualization, which means that in order to use it, your CPU must support VT technologies. You can check whether your processor supports this technology by running this command in the terminal:

kvm-ok

If the result is the message:

INFO: /dev/kvm exists
KVM acceleration can be used

then KVM will work without problems.

If the output is the message:

Your CPU does not support KVM extensions
KVM acceleration can NOT be used

then you can still use the virtual machine, but it will be much slower.
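The first message refers to the /dev/kvm device node, so a rough equivalent of that part of the check can be sketched in Python. This is an illustration under the assumption that "usable" means the device exists and the current user can read and write it; the helper name `kvm_usable` is hypothetical, and the real tool performs additional CPU checks.

```python
import os


def kvm_usable(dev_path="/dev/kvm"):
    """Return True if the KVM device node exists and is readable and writable."""
    return os.path.exists(dev_path) and os.access(dev_path, os.R_OK | os.W_OK)


if kvm_usable():
    print("KVM acceleration can be used")
else:
    print("KVM acceleration can NOT be used (or you lack access to /dev/kvm)")
```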

Install as guest 64-bit systems

Allocate more than 2 GB of RAM to guests

Installation

sudo apt-get install qemu-kvm libvirt-bin ubuntu-vm-builder bridge-utils

This is an installation for a server without X, that is, it does not include a graphical interface. You can install one with the command

sudo apt-get install virt-manager

After that, the “Virtual Machine Manager” item will appear in the menu and, with a high degree of probability, everything will work. If any problems still arise, then you will need to read the instructions in the English-language wiki.

Create a guest system

Creating a guest system with the graphical interface is quite simple.

The text-mode procedure, however, deserves a description.

qcow2

When you create a system using the graphical interface, you are prompted to use as the hard drive either an existing image file or block device, or a new file with raw (RAW) data. However, this is far from the only available file format. Of all the disk types listed in man qemu-img, qcow2 is the most flexible and up to date. It supports snapshots, encryption and compression. The image must be created before creating a new guest system.

qemu-img create -o preallocation=metadata -f qcow2 qcow2.img 20G

According to the same man qemu-img, metadata preallocation (-o preallocation=metadata) makes the disk slightly larger initially but provides better performance when the image needs to grow. In this case the option also avoids an unpleasant bug. A newly created image initially takes up less than a megabyte and grows to the specified size as needed. The guest system should immediately see the final specified size; during the installation phase, however, it may see the actual size of the file. Naturally, it will refuse to install onto a 200 KB hard drive. The bug is not specific to Ubuntu; it also appears in RHEL, at least.
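The difference between the size the guest sees and the space the file actually occupies can be inspected with `qemu-img info --output=json`, whose output includes `virtual-size` and `actual-size` fields in bytes. A minimal sketch follows; the helper names and the sample JSON values are made up for illustration.

```python
import json
import subprocess


def parse_sizes(info_json):
    """Return (virtual_size, actual_size) in bytes from qemu-img JSON output."""
    info = json.loads(info_json)
    return info["virtual-size"], info["actual-size"]


def image_sizes(path):
    """Run qemu-img on an image file and return its virtual and actual sizes."""
    out = subprocess.check_output(["qemu-img", "info", "--output=json", path])
    return parse_sizes(out.decode("utf-8"))


# Made-up sample in the shape of qemu-img output for a fresh 20G qcow2 image.
SAMPLE = '{"virtual-size": 21474836480, "actual-size": 3346432, "format": "qcow2"}'
virtual, actual = parse_sizes(SAMPLE)
print("virtual: {:.1f} GiB, on disk: {:.1f} MiB".format(virtual / 2**30, actual / 2**20))
```

On a host with qemu-img installed, `image_sizes("qcow2.img")` would report the real numbers for the image created above.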

In addition to the image type, you can later choose how to attach it: as an IDE, SCSI or Virtio disk. The performance of the disk subsystem depends on this choice. There is no single correct answer; you need to choose based on the task the guest system will perform. If the guest is created just to have a look around, any method will do. In general, I/O is usually the bottleneck of a virtual machine, so when building a heavily loaded system this choice should be taken as seriously as possible.

I am writing this note to demonstrate, step by step, how to install and set up a KVM-based Linux virtual machine. Earlier, I already wrote about virtualization, where I used the wonderful .

Now I am facing the task of renting a good server with plenty of RAM and a large hard drive. But I don't want to run projects directly on the host machine, so I will split them across separate small virtual servers running Linux or across Docker containers (I'll talk about those in another article).

All modern cloud hosting works on the same principle: a hosting provider brings up a bunch of virtual servers on good hardware, which we are used to calling VPS/VDS, and hands them out to users, or automates this process (hello, DigitalOcean).

KVM (Kernel-based Virtual Machine) is Linux software that uses the hardware virtualization extensions of x86-compatible processors (Intel VT and AMD SVM).

KVM Installation

I will carry out all the steps for creating a virtual machine on Ubuntu 16.04.1 LTS. To check whether your processor supports Intel VT or AMD SVM hardware virtualization, run:

Grep -E "(vmx|svm)" /proc/cpuinfo

If the output is not empty, then everything is in order and KVM can be installed. Ubuntu officially supports only the KVM hypervisor (part of the Linux kernel) and recommends the libvirt library as the tool for managing it, which is what we will use next.
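If you want the same check in a script, here is a minimal sketch. It only counts lines that start with "flags", because newer kernels may print extra lines that also mention vmx. The function names count_virt_cpus and has_hw_virt are mine, not part of any tool.

```shell
# Count logical CPUs whose "flags" line advertises vmx (Intel) or svm (AMD).
# $1 = cpuinfo-style file; defaults to /proc/cpuinfo.
count_virt_cpus() {
    grep -E -c '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' \
        "${1:-/proc/cpuinfo}"
}

# Succeed only if at least one CPU supports hardware virtualization.
has_hw_virt() {
    [ "$(count_virt_cpus "$1")" -gt 0 ]
}

# Usage:
#   has_hw_virt && echo "KVM can be installed"
```

A count of zero on a machine that should support virtualization often means the feature is switched off in the BIOS/UEFI settings.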

You can also check hardware virtualization support in Ubuntu with the command:

kvm-ok

If successful, you will see something like this:

INFO: /dev/kvm exists
KVM acceleration can be used

Install packages for working with KVM:

sudo apt-get install qemu-kvm libvirt-bin ubuntu-vm-builder bridge-utils

If you have access to the graphical shell of the system, then you can install the libvirt GUI manager:

sudo apt-get install virt-manager

Using virt-manager is quite simple (no more difficult than VirtualBox), so this article will focus on the console version of installing and configuring a virtual server.

Installing and configuring a virtual server

For installing, configuring and managing the system from the console, the virsh utility (a front end to the libvirt library) is an indispensable tool. It has a large number of options and parameters; a detailed description can be obtained like this:

man virsh

or call the standard "help":

virsh help

I always adhere to the following rules when working with virtual servers:

- I store ISO images of operating systems in the /var/lib/libvirt/boot directory

- I store virtual machine images in the /var/lib/libvirt/images directory

- I explicitly assign a static IP address to each new virtual machine through the hypervisor's DHCP server.

Let's start installing the first virtual machine (64-bit Ubuntu Server 16.04 LTS):

cd /var/lib/libvirt/boot

sudo wget http://releases.ubuntu.com/16.04/ubuntu-16.04.1-server-amd64.iso

After downloading the image, run the installation:

sudo virt-install \
    --virt-type=kvm \
    --name ubuntu1604 \
    --ram 1024 \
    --vcpus=1 \
    --os-variant=ubuntu16.04 \
    --hvm \
    --cdrom=/var/lib/libvirt/boot/ubuntu-16.04.1-server-amd64.iso \
    --network network=default,model=virtio \
    --graphics vnc \
    --disk path=/var/lib/libvirt/images/ubuntu1604.img,size=20,bus=virtio

Translated into "human language", these parameters mean that we are creating a virtual machine with Ubuntu 16.04, 1024 MB of RAM, 1 processor, a standard network card (the virtual machine will reach the Internet through NAT) and a 20 GB HDD.

It is worth paying attention to the --os-variant parameter: it tells the hypervisor which operating system the settings should be tuned for.

A list of available OS options can be obtained by running the command:

osinfo-query os

If this utility is not present on your system, install it:

sudo apt-get install libosinfo-bin

After starting the installation, the following message will appear in the console:

Domain installation still in progress. You can reconnect to the console to complete the installation process.

This is normal; we will continue the installation through VNC.

Let's check which port VNC was started on for our virtual machine (in another terminal, for example):

virsh dumpxml ubuntu1604 ...

Port 5900, at local address 127.0.0.1. To connect to VNC, you need to use port forwarding over SSH. Before doing this, make sure TCP forwarding is enabled in the SSH daemon. To do this, check the sshd settings:

grep AllowTcpForwarding /etc/ssh/sshd_config

If you see the following (when nothing is found, the OpenSSH default of yes applies and no change is needed):

AllowTcpForwarding no

then edit the config so that it reads

AllowTcpForwarding yes

and restart sshd.
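The check above can be sketched as a small function. It is a simplification under my own assumptions: it reads a single file, ignores Match blocks and Include directives, and falls back to yes when the option is absent, since that is the documented OpenSSH default. The function name tcp_forwarding_setting is hypothetical.

```shell
# Print the AllowTcpForwarding value from an sshd_config-style file.
# $1 = config path; defaults to /etc/ssh/sshd_config.
# Commented-out lines are ignored; the first active occurrence is used.
tcp_forwarding_setting() {
    awk '
        tolower($1) == "allowtcpforwarding" { print tolower($2); found = 1; exit }
        END { if (!found) print "yes" }
    ' "${1:-/etc/ssh/sshd_config}"
}

# Usage on the server:
#   tcp_forwarding_setting
#   sudo sshd -T | grep -i allowtcpforwarding   # effective config as sshd sees it
#   sudo service ssh restart                    # on Ubuntu the service is named ssh
```

For anything beyond a quick look, sshd -T is the more reliable source, because it prints the configuration that the daemon actually resolves.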

Configuring port forwarding

Run the command on the local machine:

ssh -fN -l login -L 127.0.0.1:5900:localhost:5900 server_ip

Here we have configured ssh port forwarding from local port 5900 to server port 5900. Now you can connect to VNC using any VNC client. I prefer UltraVNC because of its simplicity and convenience.
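If you run several virtual machines, each one gets its own VNC port, so it can be handy to read the port from the domain XML instead of assuming 5900. The sketch below assumes the graphics element is printed the way libvirt usually prints it (type first, then port, single quotes). The function names vnc_port_from_xml and tunnel_cmd are mine.

```shell
# Extract the VNC port from libvirt domain XML read on stdin.
# Expects a line such as: <graphics type='vnc' port='5900' ... >
vnc_port_from_xml() {
    sed -n "s/.*<graphics type='vnc' port='\([0-9][0-9]*\)'.*/\1/p" | head -n 1
}

# Print the ssh command that forwards that port to the local machine.
# $1 = ssh login, $2 = server address, $3 = VNC port
tunnel_cmd() {
    printf 'ssh -fN -l %s -L 127.0.0.1:%s:localhost:%s %s\n' "$1" "$3" "$3" "$2"
}

# Usage:
#   on the host:          virsh dumpxml ubuntu1604 | vnc_port_from_xml
#   on the local machine: tunnel_cmd login server_ip 5900
```

The second function only prints the command, so you can review it before running it.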

After a successful connection, the standard Ubuntu installation welcome screen will be displayed.

After the installation is complete and the usual reboot, a login window will appear. After logging in, we determine the IP address of the newly minted virtual machine in order to later make it static:

ifconfig

Note it down and go back to the host machine. Get the MAC address of the virtual machine's network card:

virsh dumpxml ubuntu1604 | grep "mac address"

Note down the MAC address.

Edit the network settings of the hypervisor:

sudo virsh net-edit default

Find the <dhcp> section and add a <host> entry for the virtual machine:

You should get something like this:

For the settings to take effect, restart the hypervisor's DHCP server:

sudo virsh net-destroy default

sudo virsh net-start default

sudo service libvirt-bin restart

After that, reboot the virtual machine. From now on it will always have the IP address assigned to it: 192.168.122.131.
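The DHCP reservation described above can also be added without opening an editor, using virsh net-update. This is an alternative to net-edit, not what I did above. The sketch builds the host element from the MAC address, a name and the IP; the function names are hypothetical, and the name and IP in the usage comment are the ones from this article.

```shell
# Extract the first MAC address from libvirt domain XML read on stdin.
mac_from_xml() {
    sed -n "s/.*<mac address='\([0-9A-Fa-f:]*\)'.*/\1/p" | head -n 1
}

# Build a <host> element for the <dhcp> section of a libvirt network.
# $1 = MAC address, $2 = host name, $3 = IP address
dhcp_host_entry() {
    printf "<host mac='%s' name='%s' ip='%s'/>\n" "$1" "$2" "$3"
}

# Usage on the host:
#   mac=$(virsh dumpxml ubuntu1604 | mac_from_xml)
#   sudo virsh net-update default add ip-dhcp-host \
#       "$(dhcp_host_entry "$mac" ubuntu1604 192.168.122.131)" --live --config
```

With --live and --config the entry is applied to the running network and saved for the next start; the guest picks up the address when it next renews its lease.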

There are other ways to set a static IP for a virtual machine, for example by directly editing the network settings inside the guest system, but that is up to you. I just showed the option that I myself prefer to use.

To connect to the terminal of the virtual machine, run:

ssh 192.168.122.131

The machine is ready for battle.

Virsh: command list

To see the running virtual machines (add --all to list all of them):

sudo virsh list

Restart a virtual machine:

sudo virsh reboot $VM_NAME

Pause (suspend) the virtual machine:

sudo virsh suspend $VM_NAME

Force a halt (the equivalent of pulling the power cord):

sudo virsh destroy $VM_NAME

Start:

sudo virsh start $VM_NAME

Shut down gracefully:

sudo virsh shutdown $VM_NAME

Start automatically when the host boots:

sudo virsh autostart $VM_NAME
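The difference between shutdown and destroy matters in scripts: shutdown only asks the guest to power off, and the guest may ignore the request. Below is a minimal sketch that tries the graceful way first and falls back to destroy after a timeout. The function name stop_vm, the 60-second default and the VIRSH override variable are my assumptions.

```shell
# Ask the guest to shut down, wait up to $2 seconds, then force it off.
# $1 = VM name, $2 = timeout in seconds (default 60).
# VIRSH may be overridden, for example VIRSH="sudo virsh".
stop_vm() {
    vm=$1
    timeout=${2:-60}
    ${VIRSH:-virsh} shutdown "$vm" >/dev/null || return 1
    while [ "$timeout" -gt 0 ]; do
        # domstate prints the current state, e.g. "running" or "shut off"
        state=$(${VIRSH:-virsh} domstate "$vm")
        [ "$state" = "shut off" ] && return 0
        sleep 1
        timeout=$((timeout - 1))
    done
    # The guest did not stop in time: pull the plug.
    ${VIRSH:-virsh} destroy "$vm" >/dev/null
}

# Usage:
#   VIRSH="sudo virsh" stop_vm "$VM_NAME" 120
```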

Very often you need to clone a system in order to use it as a template for other virtual machines in the future; the virt-clone utility is used for this.

virt-clone --help

It clones an existing VM and changes host-sensitive data such as the MAC address. Passwords, files and other user-specific information in the clone remain the same. If the cloned virtual machine had its IP address entered manually, there may be problems with SSH access to the clone because of a conflict (two hosts with the same IP).
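For illustration, here is a small sketch that prints a typical virt-clone invocation. The clone name web01 in the usage comment and the function name clone_cmd are hypothetical; the image directory default follows the convention from this article. The source machine should be shut off (or paused) before cloning.

```shell
# Print a virt-clone command that copies a VM under a new name.
# $1 = source VM, $2 = clone name, $3 = image directory (optional)
clone_cmd() {
    dir=${3:-/var/lib/libvirt/images}
    printf 'sudo virt-clone --original %s --name %s --file %s/%s.img\n' \
        "$1" "$2" "$dir" "$2"
}

# Usage:
#   clone_cmd ubuntu1604 web01
```

The function only prints the command, so nothing is copied until you run the printed line yourself.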

In addition to installing a virtual machine via VNC, it is also possible to run virt-manager over X11 forwarding. On Windows, for example, Xming and PuTTY can be used for this.